Our friends at the Boom Sound Effects production house have been at it again and have produced a stunning new sound effects package called Cinematic Expressions. We have the new release on a 20% launch offer until Saturday, May 7th, 2022. Cinematic Expressions specifically extends the range of your trailer sound effects palette, it’s the impact […]

Tag: sound effects

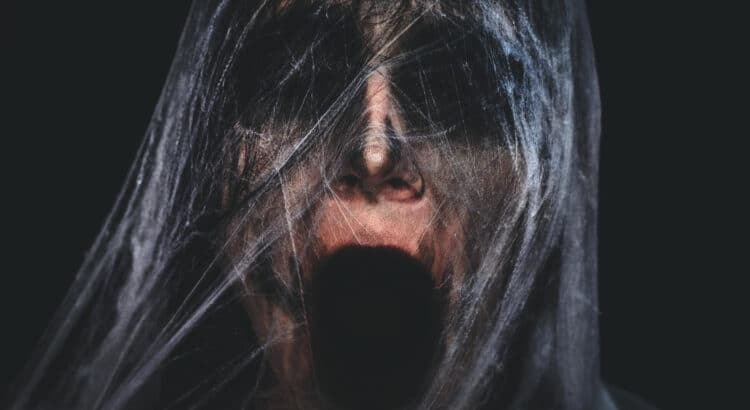

26 new and unique Mystery, Horror & Sci-fi Sound Effects added today

From Lynne Sound FX we have a new package of 26 truly spine-chilling Sci-fi/Horror Background Sounds, posted on the site today. These freshly recorded, grisly sound effects were made with a combination of human voices, synthesizers, and various effects and techniques, resulting in 26 evil, malevolent, foreboding sounds. These are not short […]

Sound Effects and the Fake Engine Roar

Over the years, the auto industry has increasingly honed its craft at creating environmentally sound cars and reducing unwanted noise levels for drivers. As a result, the authentic, organic engine sound is masked more and more. For car aficionados who may buy vehicles specifically for the engine roar, this is not necessarily a good […]

Sound Design Founders of the Theatre

1. The Theatre’s Contribution to Sound Design As with any human discipline or industry, sound design as a practice and art form developed collectively over time, spurred on by the contributions of many and the striking visions and passion of leaders in the field. Below are two major contributors to the world of sound design […]

Free sound effects pages back by popular demand

Back in the good old days, when our site Shockwave-Sound.com had the “old style” look and feel, and about half as much content as we have on the site now, we used to have a selection of “Free sound effects” pages. It wasn’t really anything very fancy, just a bunch of pages from which users […]

Harnessing the Power of Sound: Behavior and Invention

Sound is a force of nature that has its own special and unique properties. It can be used artistically to create music and soundscapes and is a vital part of human and animal communication, allowing us to develop language and literature, avoid danger, and express emotions. In addition, understanding and harnessing the unique properties of sound […]

Google Closed Captions Sound Effects

Google, ever the inventors of new technology and the owners of YouTube.com, have broadened their work into the area of sound effects, specifically through the audio captioning on their YouTube network. Traditionally, “closed captions,” which provide on-screen text for those with hearing challenges, covered dialog and narration from the audio. Now, however, Google […]

Shockwave-Sound.com raising the bar for audiophile media producers

As of January 2010, we at Shockwave-Sound.com are pleased to announce that we are introducing a new level of audio quality for royalty free music users. We’re releasing all our new music — and some of our back catalogue — as High Definition, 24-bit WAV audio files. It has been almost 10 years since our […]