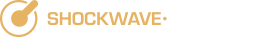

Music Modes Chart and Circle of Fifths by Endorpheus

Musical modes are variations of musical scales formed by moving the tonic (the root note) up or down a number of degrees and beginning the scale from that new starting point, while retaining the same notes of the scale. As with everything human-made or discovered, the […]
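The idea of keeping the same notes while starting from a different degree can be sketched in a few lines of Python. This is only an illustration, not something taken from the post: the choice of C major as the parent scale and the helper names `mode_of` and `all_modes` are assumptions made for the example.

```python
# Note names of the C major scale (an illustrative choice of parent scale).
C_MAJOR = ["C", "D", "E", "F", "G", "A", "B"]

# Conventional names of the seven modes, ordered by the scale degree they start on.
MODE_NAMES = [
    "Ionian", "Dorian", "Phrygian", "Lydian",
    "Mixolydian", "Aeolian", "Locrian",
]


def mode_of(scale, degree):
    """Return the scale rotated so that it begins on the given 1-based degree.

    The notes are unchanged; only the starting point (the tonic) moves.
    """
    shift = (degree - 1) % len(scale)
    return scale[shift:] + scale[:shift]


def all_modes(scale):
    """Map each conventional mode name to its rotation of the parent scale."""
    return {name: mode_of(scale, i + 1) for i, name in enumerate(MODE_NAMES)}


if __name__ == "__main__":
    for name, notes in all_modes(C_MAJOR).items():
        print(f"{name:<11}", " ".join(notes))
```

Each printed row contains the same seven notes as C major, which is the point of the definition above: a mode changes which note acts as the tonic, not which notes are in the scale.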

Tag: author Adam Johnson

Sound Oddities, part 1

1. TV Station’s Viewers Irate over Choice of Music and Sound Effects

Scott Schaffer, of WNEP, ABC’s affiliate in PA, launches into the topic of sound effects during the segment “Talkback 16,” in which the station responds to viewers’ feedback. In this particular segment, the issues defined as “critical topics” some WNEP callers relate to […]

Sound Effects and the Fake Engine Roar

Over the years, the auto industry has increasingly honed its craft at creating environmentally sound cars and reducing unwanted noise levels for drivers. As a result, the authentic, organic engine sound is masked more and more. For car aficionados who may buy vehicles specifically for the engine roar, this is not necessarily a good […]

Sound Design Founders of the Theatre

1. The Theatre’s Contribution to Sound Design

As with any human discipline or industry, sound design as a practice and art form developed collectively over time, spurred on by the contributions of many and the striking visions and passion of leaders in the field. Below are two major contributors to the world of sound design […]

Hans Jenny and The Sound Matrix

Cymatics and the Sound Matrix

Humans have long considered sound and music mystical and magical, whether worshipped by the ancients and embedded in politics and culture in ancient China, regarded as a portal to the infinite by Buddhists chanting OM, or modern-day musicians and sound designers revelling in and revering their own sound […]

Music of the Minor Modes

The prior post concerned the three major modes, which are designated by their major 3rds. The minor modes are similarly designated by their minor 3rds. Each has a unique history and flavor, but they share the familiar minor darkness of emotion. From the bittersweetness of the Dorian, the tense power of the Phrygian, […]

The Secret Power of Music: The Modern – Part II

Welcome to Part II of this blog discussion on David Tame’s The Secret Power of Music. Part I explained the main point of the initial part of Tame’s book: namely, that music among the ancients, in philosophies that can be traced up to the present time, was considered an essential part of the source of […]

Sound and Sculpture: Sound and Architecture

Artists draw inspiration from everything. The entire world around them and the human relationships they have are all sources of experience that provide the meaning they need to express. One powerful source of expression for the visual arts is sound and music, the topic of this post. Below are some beautifully intricate creations inspired by […]

The Secret Power of Music: The Ancients – Part 1

“The Secret Power of Music” by David Tame is a wide-ranging work that covers the inherent power of music and its origins in the human story. For anyone who marvels at this phenomenon that we call “music,” this is an excellent read. The book is a wide but deep study of the role […]

Harnessing the Power of Sound: Behavior and Invention

Sound is a force of nature that has its own special and unique properties. It can be used artistically to create music and soundscapes and is a vital part of human and animal communication, allowing us to develop language and literature, avoid danger, and express emotions. In addition, understanding and harnessing the unique properties of sound […]