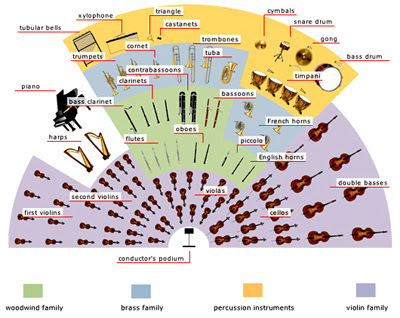

by Piotr Pacyna So, how to start? With a plan. First off, I imagine, in my head or on a sheet of paper, the placement of individual instruments/musicians on the virtual stage and then think about how to “re-create” this space in my mix. Typically I’d have three areas: […]

Tag: author piotr pacyna

Depth and space in the mix, Part 1

by Piotr Pacyna “When some things are harder to hear and others very clearly, it gives you an idea of depth.” – Mouse On Mars There are a few things that immediately allow one to distinguish between an amateur and a professional mix. One of them is depth. Depth relates to the perceived distance from the listener […]

Drum tips for music producers

by Piotr Pacyna Here are a few tips for anyone thinking about spicing up their drum parts. Some of them are for more advanced producers, while others will suit less advanced readers. However, this article is not for beginners. You have to know at least the basics of MIDI programming, […]

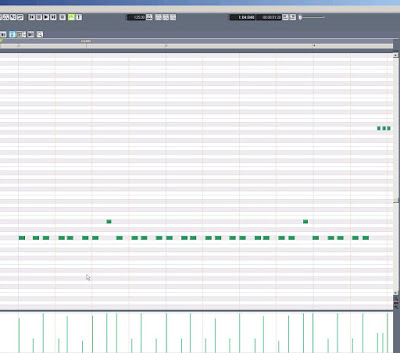

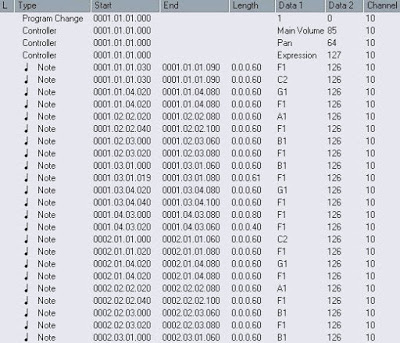

Producing MIDI music for mobile phones / cellphones, Part 2

by Piotr Pacyna 6. Controllers According to the most common opinion, one should use only those controllers that are absolutely necessary. Well, it is true, but it is not quite the whole story. Yes, some old devices are unable to read anything besides Patch Change and the Volume controller, […]

Producing MIDI music for mobile phones / cellphones, Part 1

by Piotr Pacyna I’ve produced game soundtracks for mobile phones for over 9 years now. When I was starting out, I thought that all I had to do was simply make a MIDI file. A piece of cake! But I was so wrong… The feedback that I was receiving from the clients showed me […]